Toolformer: Fine-Tuning LLMs for API Calls

The integration of Function/API calling represents a significant advancement for Large Language Models (LLMs), enabling them to access capabilities such as data retrieval, complex computation, and specialized analytics. These functionalities go beyond what LLMs can perform natively.

Take the computational limitations of standard LLMs as an example. Performing a calculation like 12345 * 67890 may be challenging for them. However, by leveraging an external calculator through API calling, the LLM can easily obtain accurate results.
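As a rough illustration of what such an external calculator could look like, here is a minimal sketch in Python. The `calculator` function is a hypothetical tool written for this article, not part of any LLM framework; it walks the parsed expression instead of calling `eval()` so that only basic arithmetic is accepted.

```python
import ast
import operator

# Arithmetic operators this toy calculator is willing to evaluate.
_OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str):
    """Evaluate +, -, *, / over numeric literals without calling eval()."""

    def evaluate(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPERATORS:
            return _OPERATORS[type(node.op)](evaluate(node.left), evaluate(node.right))
        raise ValueError("unsupported expression")

    return evaluate(ast.parse(expression, mode="eval").body)


result = calculator("12345 * 67890")  # 838102050
```

The LLM only has to produce the string "12345 * 67890"; the exact arithmetic is done outside the model.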

Despite these advancements, integrating APIs presents its own set of challenges. One of the primary questions is how to enable the LLM to recognize

- when to initiate an API call,

- which specific API to call, and

- what parameters to pass into it.

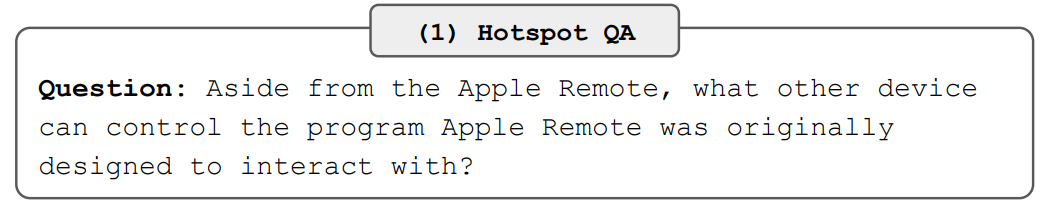

A straightforward solution to this problem is few-shot prompting, as demonstrated in the ReAct framework (https://arxiv.org/pdf/2210.03629.pdf). Within this framework, the LLM is asked to generate both ‘Thoughts’ and ‘Acts’, where each ‘Act’ specifies the API to be called along with its input parameters. In the example from the ReAct paper, Act 1 calls the Search API with the parameter ‘Apple Remote’.
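To make this concrete, here is a minimal sketch of how a host program might parse such an ‘Act’ and dispatch it to a tool. The `Search[Apple Remote]` bracket syntax follows the examples in the ReAct paper; the `parse_action` helper and the stub `search` tool are hypothetical names used only for illustration.

```python
import re
from typing import Tuple

# Matches an action such as "Search[Apple Remote]": a tool name plus a bracketed argument.
ACTION_PATTERN = re.compile(r"^\s*(?P<tool>\w+)\[(?P<arg>.*)\]\s*$")


def parse_action(action_text: str) -> Tuple[str, str]:
    """Split an action string into (tool_name, argument)."""
    match = ACTION_PATTERN.match(action_text)
    if match is None:
        raise ValueError(f"Not a recognizable action: {action_text!r}")
    return match.group("tool"), match.group("arg")


def search(query: str) -> str:
    """Stub tool (hypothetical); a real system would query a search backend here."""
    return f"<search results for {query!r}>"


# Registry mapping tool names the model may emit to Python callables.
TOOLS = {"Search": search}

tool_name, argument = parse_action("Search[Apple Remote]")
observation = TOOLS[tool_name](argument)
```

The returned observation would then be fed back to the LLM as context for its next ‘Thought’.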

Toolformer: a solution of fine-tuning

Another strategy for improving an LLM's decisions about when and how to use APIs is fine-tuning. Toolformer is a method designed for this purpose.

The primary objective of Toolformer is to fine-tune an LLM so it can perform the following sequence of actions:

- During inference, if the model determines that an external API is needed, it generates a token sequence in the format [API(input_of_api)->.

- Upon encountering the ‘->’ token, the model’s token generation is halted.

- An external API call is initiated with the specified input_of_api, and the output_of_api is obtained.

- This output_of_api is appended to the halted token sequence, resulting in the structure [API(input_of_api)-> output_of_api].

- Token generation resumes, following the newly appended output_of_api.

To illustrate this process, consider the query, “Where was Joe Biden born?” The intended behavior of the LLM using Toolformer would proceed as follows:

- The LLM initially generates: “Joe Biden was born in [QA(‘where was Joe Biden born?’)->”.

- Token generation is then paused.

- An external API call to QA(‘where was Joe Biden born?’) returns “Scranton” as the output.

- “Scranton” is appended to the halted token sequence, resulting in “Joe Biden was born in [QA(‘where was Joe Biden born?’)-> Scranton]”.

- Token generation resumes, following the completed sequence.
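The pause-call-resume loop described above can be sketched as follows. This is an illustration under stated assumptions, not the Toolformer authors' implementation: `run_with_tools`, `toy_model`, and the `QA` stub are hypothetical names, and the scripted `toy_model` stands in for a real fine-tuned LLM whose decoding stops at ‘->’.

```python
import re
from typing import Callable, Dict

# Matches an unfinished call at the end of the text, e.g. "[QA('where ...?')->".
PENDING_CALL = re.compile(r"\[(?P<tool>\w+)\((?P<arg>.*)\)->$")


def run_with_tools(
    prompt: str,
    generate_until_arrow: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_calls: int = 5,
) -> str:
    """Alternate between model generation and tool execution.

    `generate_until_arrow` is a stand-in for the language model: given the text
    so far, it returns a continuation that stops right after "->" or at the end.
    """
    text = prompt
    for _ in range(max_calls):
        text += generate_until_arrow(text)
        call = PENDING_CALL.search(text)
        if call is None:
            return text  # no pending API call, so generation is finished
        tool_input = call.group("arg").strip("'\"")
        result = tools[call.group("tool")](tool_input)
        text += f" {result}]"  # append the API output and close the bracket
    # Call budget exhausted: let the model finish without executing more calls.
    return text + generate_until_arrow(text)


def toy_model(text: str) -> str:
    """Scripted stand-in for a fine-tuned model (hypothetical)."""
    if text.endswith("]"):
        return " Scranton."
    return "Joe Biden was born in [QA('where was Joe Biden born?')->"


tools = {"QA": lambda question: "Scranton"}  # stub question-answering tool
output = run_with_tools("", toy_model, tools)
```

With these stubs, `output` contains the completed call followed by the resumed generation, mirroring the Joe Biden walkthrough.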

Toolformer training procedure

The training process for Toolformer is organized into the following steps:

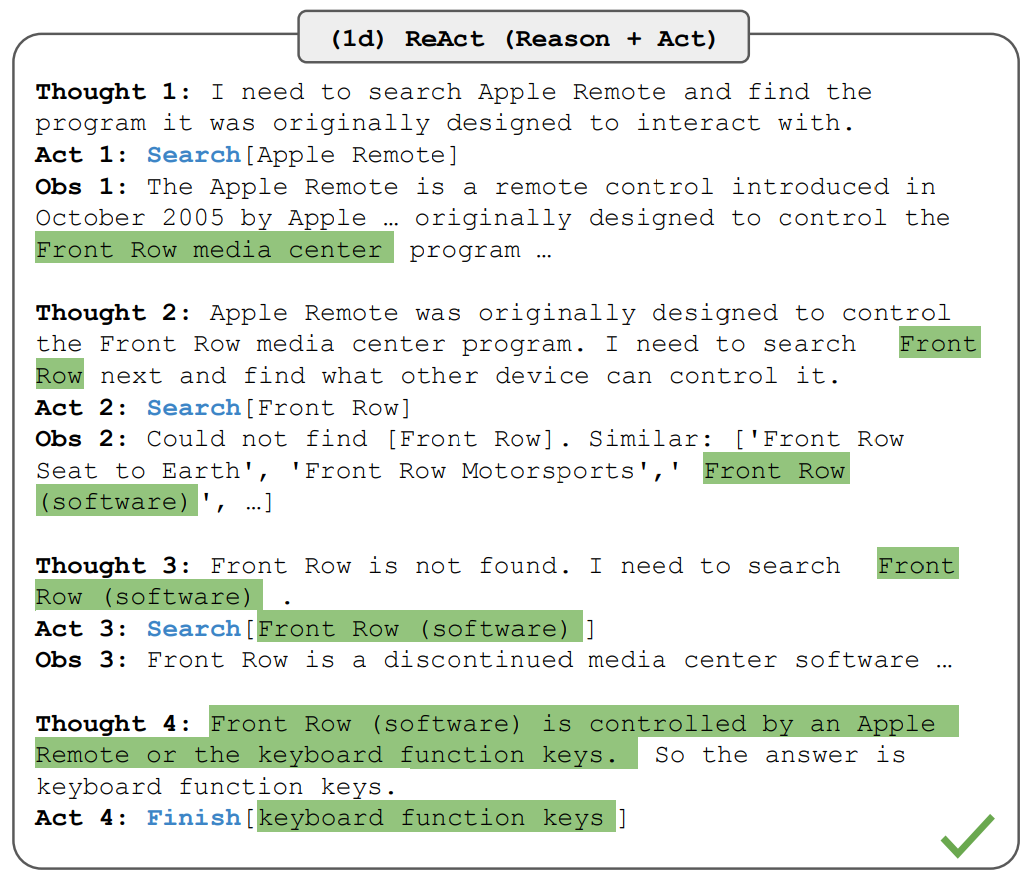

- Sampling potential API calls. First, a pre-trained language model annotates a plain-text dataset with candidate API calls. It is prompted with a few examples that illustrate how each API is used (few-shot prompting).

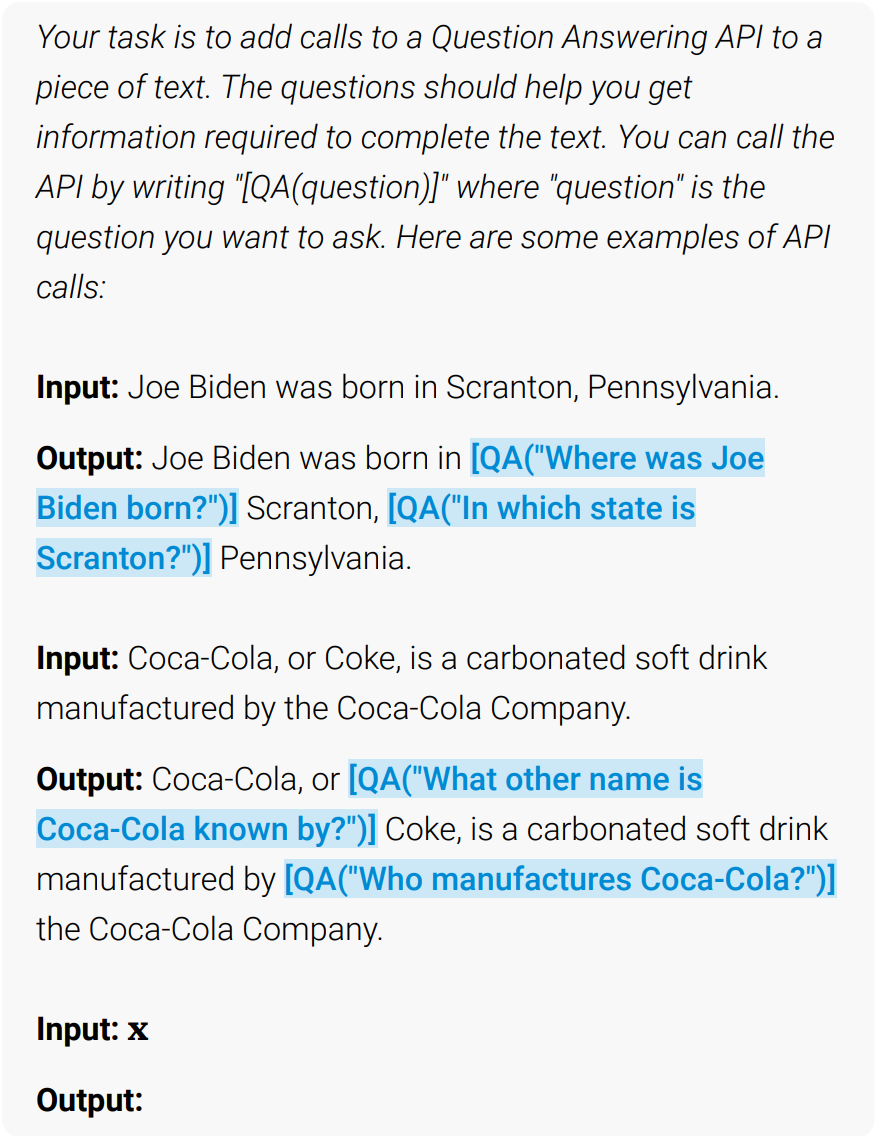
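As a rough sketch of this sampling step, the snippet below assembles a few-shot prompt that asks a model to insert QA calls into a new text. The instruction wording, the example pairs, and the `build_annotation_prompt` helper are illustrative assumptions, loosely modeled on the prompt style described in the Toolformer paper rather than copied from it.

```python
# (plain text, same text annotated with an API call) pairs shown to the model.
FEW_SHOT_EXAMPLES = [
    (
        "Joe Biden was born in Scranton.",
        'Joe Biden was born in [QA("Where was Joe Biden born?")] Scranton.',
    ),
    (
        "Pittsburgh is also known as the Steel City.",
        'Pittsburgh is also known as [QA("What other name is Pittsburgh known by?")] the Steel City.',
    ),
]

INSTRUCTION = (
    "Your task is to add calls to a Question Answering API to a piece of text. "
    'You can call the API by writing "[QA(question)]". '
    "Here are some examples of API calls:"
)


def build_annotation_prompt(text: str) -> str:
    """Return a few-shot prompt that ends with the text to annotate."""
    parts = [INSTRUCTION]
    for plain, annotated in FEW_SHOT_EXAMPLES:
        parts.append(f"Input: {plain}\nOutput: {annotated}")
    parts.append(f"Input: {text}\nOutput:")
    return "\n\n".join(parts)


prompt = build_annotation_prompt("Coca-Cola, or Coke, is a carbonated soft drink.")
```

The model's completion of this prompt is the candidate annotation; a separate prompt of the same shape would be needed for each tool.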

- Executing API calls. All the API calls generated by the model are executed to obtain the corresponding results. The method of execution depends on the specific API: it could involve calling another neural network, running a Python script, or using a retrieval system. The Toolformer paper uses five tools: a question answering system, a calculator, a Wikipedia search engine, a machine translation system, and a calendar.

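- Filtering API calls. This step checks whether each API response actually helps the model predict the tokens that follow it. The model's loss on those tokens is computed with the call and its result inserted, and compared with the loss when no call is made or when the call is made but its result is withheld. A call is kept only if including the result reduces the loss by at least a chosen threshold.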

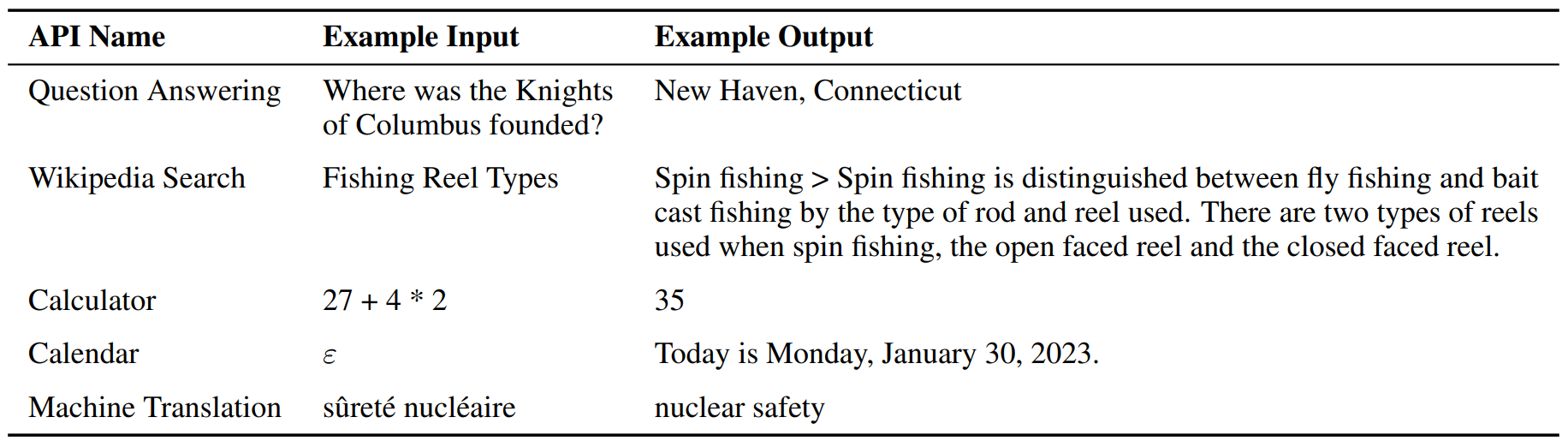
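The filtering rule can be sketched in a few lines. Following the Toolformer paper, the loss with the call and its result as a prefix is compared against the better of two baselines: no call at all, and the call without its result. The function name, the example loss values, and the threshold below are made up for illustration.

```python
def keep_api_call(
    loss_with_result: float,
    loss_without_call: float,
    loss_call_no_result: float,
    threshold: float,
) -> bool:
    """Keep a call only if seeing its result lowers the loss on later tokens enough.

    All three losses are the model's loss over the tokens that follow the call
    position, computed under three different prefixes.
    """
    baseline = min(loss_without_call, loss_call_no_result)
    return baseline - loss_with_result >= threshold


# Hypothetical numbers: the QA result makes the next tokens much easier to predict.
helpful = keep_api_call(1.2, 3.0, 3.1, threshold=1.0)
# Hypothetical numbers: the result barely changes the loss, so the call is dropped.
unhelpful = keep_api_call(2.9, 3.0, 3.1, threshold=1.0)
```

Taking the minimum over both baselines means a call is not rewarded merely because writing out the question itself made the continuation easier to predict.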

- LLM fine-tuning. After filtering, the remaining API calls for the different tools are merged into the original text, producing an augmented dataset, and the LLM itself is fine-tuned on that dataset. Here are examples of texts in the augmented dataset:

- Pittsburgh is also known as [QA(“What other name is Pittsburgh known by?”) → Steel City] the Steel City.

- Joe Biden was born in [QA(“Where was Joe Biden born?”) → Scranton] Scranton.

- The answer of 12345 * 67890 is [Calculator(12345 * 67890) → 838,102,050] 838,102,050.
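Augmented examples like these can be produced by splicing a filtered call and its result back into the original text. The sketch below is a simplification: the `insert_api_call` helper is hypothetical and works on character positions for readability, whereas the paper operates on token positions.

```python
def insert_api_call(text: str, position: int, tool: str, tool_input: str, result: str) -> str:
    """Splice "[tool(tool_input) → result] " into `text` at character index `position`."""
    call = f"[{tool}({tool_input}) → {result}] "
    return text[:position] + call + text[position:]


sentence = "Joe Biden was born in Scranton."
# The call goes right before the word it is meant to help predict.
position = sentence.index("Scranton")
augmented = insert_api_call(sentence, position, "QA", '"Where was Joe Biden born?"', "Scranton")
```

Because the original words are left untouched, the augmented text is still ordinary language-modeling data with the API calls interleaved.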

The advantage of Toolformer is that the approach is self-supervised and does not require large amounts of human annotations. It allows the model to decide for itself when and how to use which tool, making it highly flexible and generalizable.

References

- Yao, S., Zhao, J., Yu, D., Du, N., Shafran, I., Narasimhan, K., & Cao, Y. (2022). ReAct: Synergizing reasoning and acting in language models. arXiv preprint arXiv:2210.03629.

- Schick, T., Dwivedi-Yu, J., Dessì, R., Raileanu, R., Lomeli, M., Zettlemoyer, L., … & Scialom, T. (2023). Toolformer: Language models can teach themselves to use tools. arXiv preprint arXiv:2302.04761.